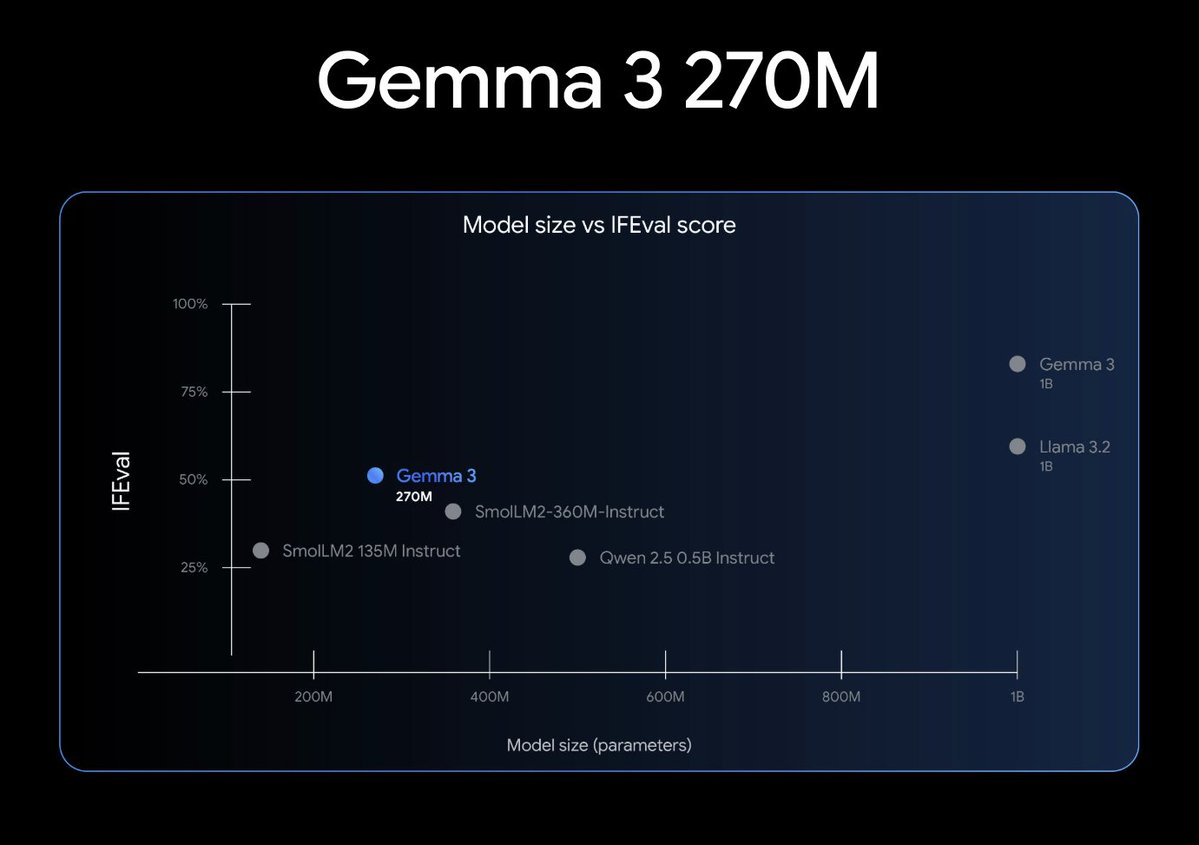

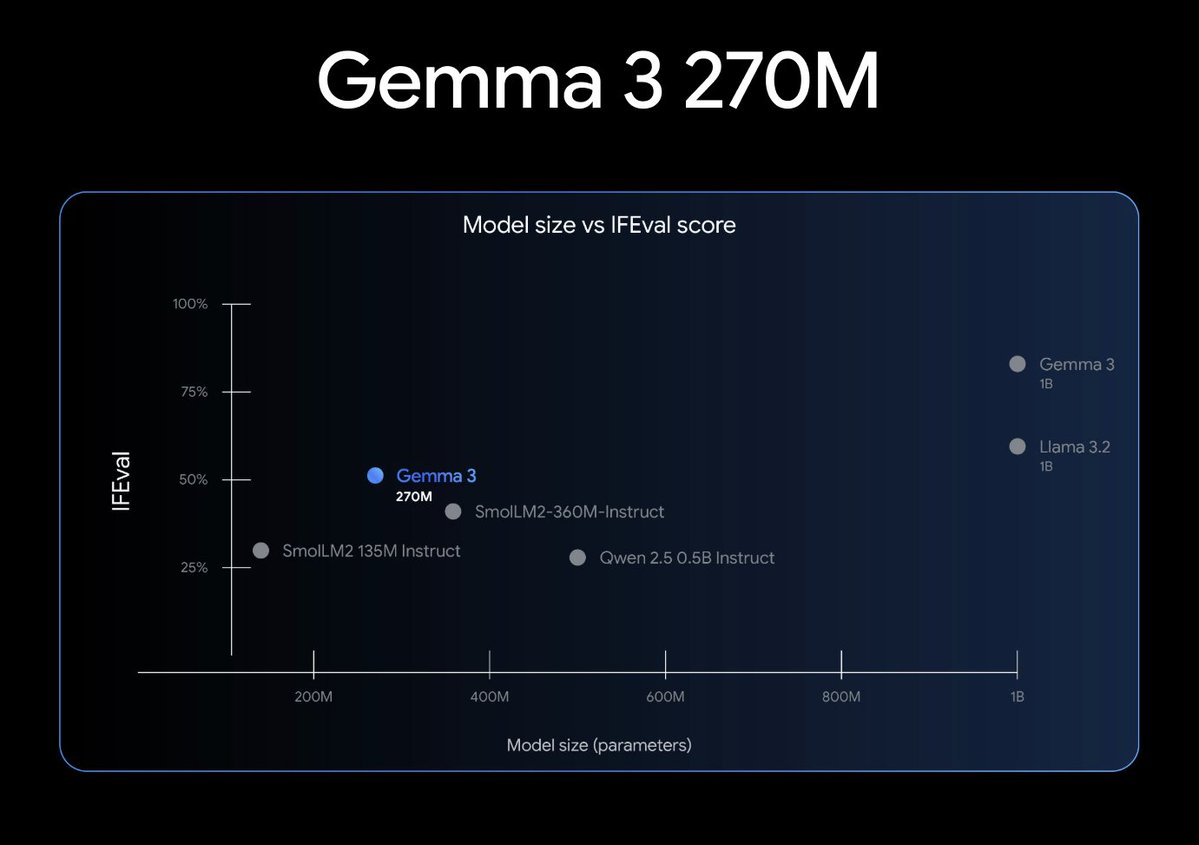

Introducing Gemma 3 270M, a new compact open model engineered for hyper-efficient AI. It is built on the Gemma 3 architecture, with 170 million embedding parameters and 100 million parameters in the transformer blocks.

- Sets a new level of performance for its size on the IFEval benchmark.

- Built for domain adaptation and specialized fine-tuning.

- Uses just 0.75% of the battery for 25 conversations on a Pixel 9 Pro.

- Available as both instruction-tuned and base models.

- INT4 Quantization-Aware Trained (QAT) checkpoints are available.
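To put the figures above in perspective, here is a minimal back-of-the-envelope sketch in Python. The parameter counts and battery numbers come from the post. The INT4 weight-size estimate is an assumption added for illustration: it counts exactly 4 bits per weight and ignores quantization scales, activations, and the KV cache, so treat it as a rough lower bound rather than a measured footprint.

```python
# Figures quoted in the post.
EMBEDDING_PARAMS = 170_000_000
TRANSFORMER_PARAMS = 100_000_000
BATTERY_PERCENT_USED = 0.75
CONVERSATIONS = 25

# Assumption (not from the post): every weight is stored in exactly 4 bits,
# with no overhead for quantization scales, activations, or the KV cache.
BITS_PER_INT4_WEIGHT = 4

# Total parameter count and the fraction that sits in the embeddings.
total_params = EMBEDDING_PARAMS + TRANSFORMER_PARAMS
embedding_share = EMBEDDING_PARAMS / total_params

# Rough size of the weights alone at INT4, in megabytes (10^6 bytes).
int4_weight_megabytes = total_params * BITS_PER_INT4_WEIGHT / 8 / 1_000_000

# Average battery drain per conversation, in percentage points.
battery_per_conversation = BATTERY_PERCENT_USED / CONVERSATIONS

print(f"Total parameters:           {total_params:,}")
print(f"Share in embeddings:        {embedding_share:.0%}")
print(f"Approx. INT4 weight size:   {int4_weight_megabytes:.0f} MB (estimate)")
print(f"Battery per conversation:   {battery_per_conversation:.2f}%")
```

The two parameter groups add up to the 270 million in the model's name, and the quoted battery figure works out to about 0.03% per conversation.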

https://developers.googleblog.com/en/introducing-gemma-3-270m