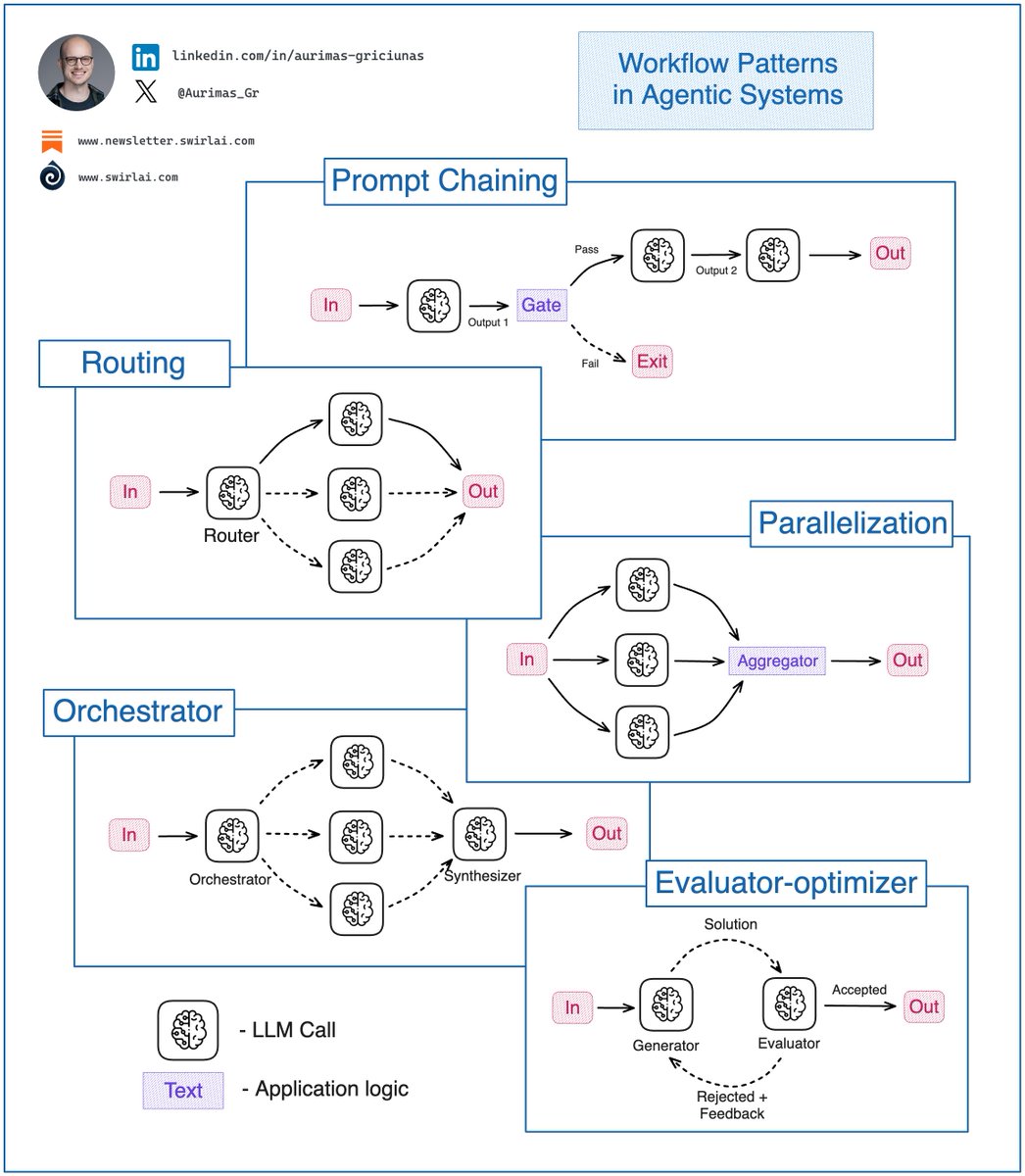

You must know these 𝗔𝗴𝗲𝗻𝘁𝗶𝗰 𝗦𝘆𝘀𝘁𝗲𝗺 𝗪𝗼𝗿𝗸𝗳𝗹𝗼𝘄 𝗣𝗮𝘁𝘁𝗲𝗿𝗻𝘀 as an 𝗔𝗜 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿.

If you are building Agentic Systems in an Enterprise setting, you will soon discover that the simplest workflow patterns work the best and bring the most business value.

At the end of last year Anthropic did a great job summarising the top patterns for these workflows, and they still hold strong.

Let’s explore what they are and where each can be useful:

𝟭. 𝗣𝗿𝗼𝗺𝗽𝘁 𝗖𝗵𝗮𝗶𝗻𝗶𝗻𝗴: This pattern decomposes a complex task into manageable pieces and solves them by chaining them together. The output of one LLM call becomes the input to the next.
✅ In most cases such decomposition results in higher accuracy at the cost of higher latency.
ℹ️ In heavy production use cases, Prompt Chaining is usually combined with the patterns below: any of them can replace a single LLM call node in the chain.

𝟮. 𝗥𝗼𝘂𝘁𝗶𝗻𝗴: In this pattern, the input is classified into one of several potential paths and the appropriate one is taken.
✅ Useful when the workflow is complex and specific paths through it can be handled more efficiently by a specialized workflow.
ℹ️ Example: Agentic Chatbot. Should I answer the question with RAG, or should I perform some actions that the user has asked for?

𝟯. 𝗣𝗮𝗿𝗮𝗹𝗹𝗲𝗹𝗶𝘇𝗮𝘁𝗶𝗼𝗻: The initial input is split into multiple queries that are passed to the LLM, then the answers are aggregated to produce the final answer.
✅ Useful when speed is important and multiple inputs can be processed in parallel without waiting for other outputs. Also useful when additional accuracy is required.
ℹ️ Example 1: Query rewrite in Agentic RAG to produce multiple different queries for majority voting. Improves accuracy.
ℹ️ Example 2: Multiple items are extracted from an invoice, and all of them can be processed further in parallel for better speed.

𝟰. 𝗢𝗿𝗰𝗵𝗲𝘀𝘁𝗿𝗮𝘁𝗼𝗿: An orchestrator LLM dynamically breaks down tasks and delegates them to other LLMs or sub-workflows.
✅ Useful when the system is complex and there is no clear hardcoded path to the final result.
ℹ️ Example: Choosing which datasets to use in Agentic RAG.

𝟱. 𝗘𝘃𝗮𝗹𝘂𝗮𝘁𝗼𝗿-𝗼𝗽𝘁𝗶𝗺𝗶𝘇𝗲𝗿: A Generator LLM produces a result, then an Evaluator LLM evaluates it and provides feedback for further improvement if necessary.
✅ Useful for tasks that require iterative refinement.
ℹ️ Example: A Deep Research Agent workflow where a report paragraph is refined through repeated web searches.

𝗧𝗶𝗽𝘀:
❗️ Before going for full-fledged Agents, you should always try to solve the problem with the simpler Workflows described in the article.

What are the most complex workflows you have deployed to production? Let me know in the comments 👇

#LLM #AI #MachineLearning
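
For readers who prefer code, here is a minimal Python sketch of patterns 1, 2, 3 and 5 from the list above. It is an illustration under assumptions, not a reference implementation: `LLM` is a hypothetical stand-in for whatever client you use (any function that takes a prompt and returns text), and all function names, the `"OK"` acceptance signal and the prompt layouts are made up for this example. The Orchestrator pattern is left out because it depends heavily on how your model returns its plan.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical type: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


def prompt_chain(llm: LLM, steps: List[str], task: str) -> str:
    """Prompt chaining: the output of one call becomes the input to the next."""
    result = task
    for instruction in steps:
        result = llm(f"{instruction}\n\n{result}")
    return result


def route(classifier: LLM, handlers: Dict[str, Callable[[str], str]],
          query: str, default: str) -> str:
    """Routing: classify the input, then take the matching path."""
    label = classifier(query).strip().lower()
    # Fall back to the default path when the classifier returns an unknown label.
    handler = handlers.get(label, handlers[default])
    return handler(query)


def parallel_vote(llm: LLM, prompts: List[str]) -> str:
    """Parallelization: run independent calls concurrently, then majority-vote.

    Assumes `prompts` is non-empty and that answers are comparable strings.
    """
    with ThreadPoolExecutor() as pool:
        answers = list(pool.map(llm, prompts))
    return Counter(answers).most_common(1)[0][0]


def evaluate_and_optimize(generator: LLM, evaluator: LLM, task: str,
                          max_rounds: int = 3) -> str:
    """Evaluator-optimizer: regenerate with feedback until the evaluator accepts.

    Assumes the evaluator replies "OK" when no further changes are needed.
    """
    draft = generator(task)
    for _ in range(max_rounds):
        feedback = evaluator(draft)
        if feedback.strip().upper() == "OK":
            break
        draft = generator(
            f"{task}\n\nPrevious draft:\n{draft}\n\nFeedback:\n{feedback}"
        )
    return draft
```

Because every pattern is just a function around an `LLM` callable, they compose the way the Prompt Chaining note suggests: a handler passed to `route` can itself be a `prompt_chain`, and a step in a chain can be a `parallel_vote`. The `max_rounds` cap is a deliberate choice so the refinement loop always terminates, even if the evaluator never accepts.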